Original article

Classification of dental implant systems using cloud-based deep learning algorithm: an experimental study

Hyun Jun Kong

Journal of Yeungnam Medical Science 2023;40(Suppl):S29-S36.

DOI: https://doi.org/10.12701/jyms.2023.00465

Published online: July 26, 2023

Department of Prosthodontics, College of Dentistry, Wonkwang University, Iksan, Korea

- Corresponding author: Hyun Jun Kong, DDS, PhD. Department of Prosthodontics, College of Dentistry, Wonkwang University, 895 Muwang-ro, Iksan 54538, Korea. Tel: +82-63-859-2938 • Fax: +82-63-857-4002 • E-mail: zsfv@wku.ac.kr

• Received: May 4, 2023 • Revised: June 7, 2023 • Accepted: June 19, 2023

Copyright © 2023 Yeungnam University College of Medicine, Yeungnam University Institute of Medical Science

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/4.0/) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

Background

- This study aimed to evaluate the accuracy and clinical usability of implant system classification using automated machine learning on a Google Cloud platform.

Methods

- Four dental implant systems were selected: Osstem TSIII, Osstem USII, Biomet 3i Osseotite External, and Dentsply Sirona Xive. A total of 4,800 periapical radiographs (1,200 for each implant system) were collected and labeled based on electronic medical records. Regions of interest were manually cropped to 400×800 pixels, and all images were uploaded to Google Cloud storage. Approximately 80% of the images were used for training, 10% for validation, and 10% for testing. Google automated machine learning (AutoML) Vision automatically executed a neural architecture search technology to apply an appropriate algorithm to the uploaded data. A single-label image classification model was trained using AutoML. The performance of the model was evaluated in terms of accuracy, precision, recall, specificity, and F1 score.

Results

- The accuracy, precision, recall, specificity, and F1 score of the AutoML Vision model were 0.981, 0.963, 0.961, 0.985, and 0.962, respectively. Osstem TSIII had an accuracy of 100%. Osstem USII and 3i Osseotite External were most often confused in the confusion matrix.

Conclusion

- Deep learning-based AutoML on a cloud platform classified dental implant systems with high accuracy, comparable to that of a fine-tuned convolutional neural network. Higher-quality images from various implant systems will be required to improve the performance and clinical usability of the model.

- Successful application of osseointegrated implants is a major development in prosthetic dentistry. Hence, clinicians can provide multiple treatment options, even in cases where functional and esthetic rehabilitation is restricted [1]. Such implants have been reported to show high success and predictability regardless of the type [2-5]. In recent years, the continued development of dental implants has led to the global introduction of many types of implant systems [6,7]. Since the 2000s, hundreds of manufacturers have produced thousands of types of implant systems, and the number has been increasing [8-10].

- Implants consist of fixtures, abutments, suprastructures, and screws, the internal structures and compatible tools of which vary according to the manufacturer and system. When mechanical complications (e.g., screw loosening or fracture) occur, finding appropriate treatment can be difficult if the implant system cannot be correctly identified. Hence, the manufacturer’s proprietary abutment screws directly affect the successful maintenance of implants [11]. Therefore, the accurate identification and classification of implant systems is clinically important. Although periapical radiographs are commonly used to identify implant systems, it is difficult to accurately identify implants with similar shapes and structures because of the limitations of radiographs (e.g., distortion, haziness, and noise) [12,13]. Furthermore, without sufficient experience and knowledge of various implant systems, clinicians have a great deal of difficulty identifying them. Thus, there is a need for a better method to accurately classify implant systems without relying on clinician experience and knowledge.

- Artificial intelligence (AI) and deep learning methods have recently led to significant advancements in the medical field. In particular, convolutional neural networks (CNNs), a key model type of artificial neural networks, enable breakthroughs in research and analysis, including automated medical image identification and classification [14,15]. CNNs extract the features of images via input-data filtering, which is improved through feature enhancement, size reduction, and other alterations. These processes are repeated numerous times to obtain satisfactory results. CNNs are effective at recognizing and classifying patterns in images and can be trained without the need for human intervention to recognize specific patterns [16,17].
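The filtering, feature enhancement, and size reduction described above can be illustrated with a minimal sketch in plain Python. It is a toy example only: the 5×5 image, the 2×2 kernel, and the function names are assumptions made for illustration, and a real CNN learns its kernel values during training rather than using fixed ones.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Filtering step: slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map: Matrix) -> Matrix:
    """Feature enhancement: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map: Matrix, size: int = 2) -> Matrix:
    """Size reduction: keep the strongest response in each size x size block."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy image: dark on the left, bright on the right (a vertical edge).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds to a dark-to-bright vertical edge.
kernel = [[-1, 1], [-1, 1]]

features = max_pool(relu(conv2d(image, kernel)))
```

In this toy case the pooled feature map is non-zero only in the column that contains the edge, which is the kind of localized pattern response that stacked convolutional layers build on.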

- In previous studies, CNN-based deep learning methods for implant system classification have been investigated [18-20]. Recent studies have shown high accuracy in multicenter datasets targeting various types of implants [21,22]. However, CNN algorithms must be finely tuned for specific types of images, which requires high-level programming skills and mathematical knowledge [23,24]. There are also practical limitations in the development and use of such programs for clinical applications, even if previously successful algorithms are used.

- Google Cloud automated machine learning (AutoML) Vision (Google LLC, Mountain View, CA, USA) is an open platform providing image-based AI model training, evaluation, and prediction using cloud-based computer resources. These systems are sufficiently high-end for model training and result evaluation, thereby saving enormous time and effort [25]. AutoML Vision allows researchers to train their own AI models without specialized expertise or resources. In traditional deep learning, data collection, preprocessing, optimization, and prediction are manually tuned and executed. With AutoML, every step apart from data collection is automated [26]. Therefore, this study aims to evaluate the accuracy and clinical usability of implant system classification using CNN-based AutoML on the Google Cloud platform.

Introduction

- Ethical statements: This study was approved by the Institutional Review Board (IRB) of Wonkwang University Dental Hospital (IRB No: WKDIRB202012-01), which waived the need for informed consent owing to the retrospective design of the study.

- 1. Data collection

- Periapical radiographic images were obtained from patients who underwent implant treatment at Wonkwang University Dental Hospital between January 2005 and December 2019. All images had a resolution of 1,440×1,920 pixels and were extracted as JPEG files using a picture archiving and communication system (INFINITT PACS; Infinitt, Seoul, Korea). The images were then classified based on electronic medical record systems and dental implant inventory records. Four commonly used dental implant systems were selected for this study: TSIII and USII (Osstem Implant Co. Ltd., Seoul, Korea), Osseotite External (Biomet 3i LLC, West Palm Beach, FL, USA), and Xive S plus (Dentsply Sirona, York, PA, USA). Details of the dental implant systems and the number of periapical images used are shown in Table 1. Periapical images of the lowest part of the fixture on the abutment, crown, or attachment were used. Owing to the projection angle and overlap of anatomical structures, images of very low quality, in which internal structures could not be identified with the naked eye, were excluded.

- 2. Preprocessing and model building

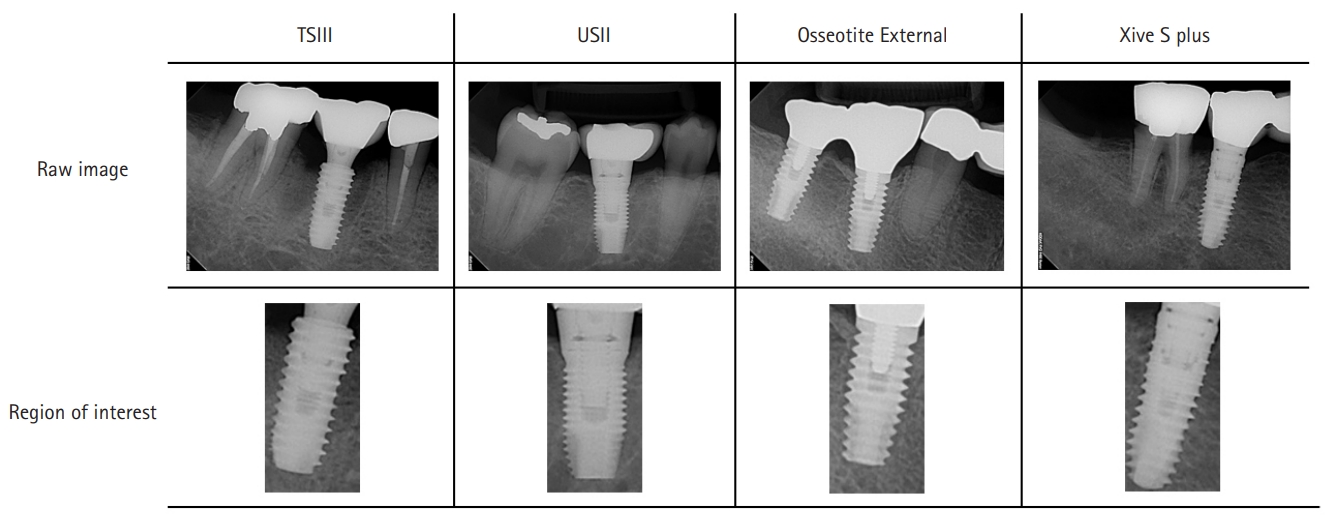

- The regions of interest were manually cropped to 400×800 pixels using an image editing program (PhotoScape; MOOII Tech, Seoul, Korea) (Fig. 1). Images of the maxillary implant were flipped vertically, and if the fixture was tilted >45°, the image was rotated parallel to the vertical axis.
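The cropping and flipping steps were performed manually in an image editor. As a rough sketch of how the same geometry could be scripted, the plain-Python example below computes a 400×800 crop box around a chosen fixture center and flips maxillary images vertically. The function names, the idea of supplying a center point, and the nested-list image representation are assumptions for illustration; rotation of fixtures tilted by more than 45° is not covered, and the image is assumed to be at least as large as the crop.

```python
from typing import List, Tuple

CROP_W, CROP_H = 400, 800    # crop size reported in the text
IMG_W, IMG_H = 1440, 1920    # source resolution reported in the text

Image = List[List[int]]      # rows of grayscale pixel values


def crop_box(cx: int, cy: int,
             img_w: int = IMG_W, img_h: int = IMG_H) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a CROP_W x CROP_H box centered
    on (cx, cy) and shifted where needed so it stays inside the image."""
    left = min(max(cx - CROP_W // 2, 0), img_w - CROP_W)
    top = min(max(cy - CROP_H // 2, 0), img_h - CROP_H)
    return left, top, left + CROP_W, top + CROP_H


def flip_vertical(pixels: Image) -> Image:
    """Reverse the row order so that the image is turned upside down."""
    return pixels[::-1]


def preprocess(pixels: Image, cx: int, cy: int, is_maxillary: bool) -> Image:
    """Crop around the fixture center; flip maxillary images so that all
    fixtures share the same vertical orientation."""
    left, top, right, bottom = crop_box(cx, cy, len(pixels[0]), len(pixels))
    cropped = [row[left:right] for row in pixels[top:bottom]]
    return flip_vertical(cropped) if is_maxillary else cropped
```

Clamping the box to the image bounds keeps every output at exactly 400×800 pixels even when the fixture lies near the edge of the radiograph.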

- AutoML Vision automatically executes Google’s neural architecture search technology to apply an appropriate algorithm to uploaded data [26,27]. AutoML Vision automatically trains models based on data provided by the user. During the training process, AutoML Vision utilizes machine learning algorithms to learn the visual features and patterns of the images and generate the optimal model. Subsequently, the performance of the model is measured using evaluation metrics, such as accuracy and recall. The trained model can be used on various platforms such as mobile apps or web services through the application programming interface (API). This can generate prediction results for new images.

- To build an implant system classification model, the following processes were performed to prepare the images and configure the model using the AutoML Vision user interface. (1) A new dataset was created as a single-label classification. (2) A total of 4,800 cropped images were uploaded to the bucket in Google Cloud storage and labeled. (3) The images were randomly separated into three groups (80% for model learning, 10% for fine-tuning, and 10% for performance evaluation). (4) A model was trained for 32 node hours. Google Cloud’s n1-standard-8 machine with NVIDIA Tesla V100 was used.
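The study configured the dataset through the AutoML Vision user interface. For readers who prefer to fix the split themselves, the sketch below shows one way an 80/10/10 random split could be written out as an import file, assuming the three-column layout (set name, Cloud Storage URI, label) with the set names TRAIN, VALIDATION, and TEST. The bucket name, folder layout, file names, and label strings are hypothetical, and the layout should be checked against the current Google Cloud documentation before use.

```python
import csv
import io
import random
from collections import Counter

# Hypothetical label strings and bucket path, for illustration only.
LABELS = ["TSIII", "USII", "Osseotite_External", "Xive"]
IMAGES_PER_LABEL = 1200                      # as reported in the text
BUCKET = "gs://example-implant-bucket"       # hypothetical bucket name


def build_import_csv(seed: int = 0) -> str:
    """Shuffle all images and assign 80% / 10% / 10% to the three sets."""
    items = [
        (f"{BUCKET}/{label}/{i:04d}.jpg", label)
        for label in LABELS
        for i in range(IMAGES_PER_LABEL)
    ]
    random.Random(seed).shuffle(items)       # seeded for reproducibility

    n_train = len(items) * 8 // 10
    n_val = len(items) // 10

    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for index, (uri, label) in enumerate(items):
        if index < n_train:
            split = "TRAIN"
        elif index < n_train + n_val:
            split = "VALIDATION"
        else:
            split = "TEST"
        writer.writerow([split, uri, label])
    return buffer.getvalue()


csv_text = build_import_csv()
split_counts = Counter(line.split(",")[0] for line in csv_text.splitlines())
```

With 4,800 images this yields 3,840 training, 480 validation, and 480 test rows. The split here is random over the whole dataset rather than stratified by implant system, which is a simplifying assumption.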

- 3. Metrics for accuracy comparison

- AutoML Vision provides the area under the precision-recall curve as a metric for evaluating model performance (i.e., average precision). The closer the value is to 1.0, the more accurate the model is. For comparison with other deep learning methods, the accuracy, precision, recall, specificity, and F1 score were calculated using the following equations: $\text{accuracy}=\frac{TP+TN}{TP+FP+FN+TN}$; $\text{precision}=\frac{TP}{TP+FP}$; $\text{recall}=\frac{TP}{TP+FN}$; $\text{specificity}=\frac{TN}{TN+FP}$; $\text{F1 score}=\frac{2\times\text{recall}\times\text{precision}}{\text{recall}+\text{precision}}$; where TP is true positive, TN is true negative, FP is false positive, and FN is false negative.
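For a four-class problem, TP, FP, FN, and TN are usually counted per class in a one-vs-rest fashion from the confusion matrix. The sketch below shows that calculation in plain Python. The confusion matrix is invented purely for illustration and is not the study's data, and averaging the per-class values (macro-averaging) is an assumption, since the text does not state how the overall figures were aggregated.

```python
from typing import Dict, List


def one_vs_rest_counts(cm: List[List[int]], k: int) -> Dict[str, int]:
    """TP/FP/FN/TN for class k; rows are true labels, columns are predictions."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                      # class k predicted as another class
    fp = sum(row[k] for row in cm) - tp       # other classes predicted as class k
    tn = total - tp - fn - fp
    return {"TP": tp, "FP": fp, "FN": fn, "TN": tn}


def metrics(c: Dict[str, int]) -> Dict[str, float]:
    """Apply the five formulas; assumes every class has at least one
    true example and one prediction, so no denominator is zero."""
    tp, fp, fn, tn = c["TP"], c["FP"], c["FN"], c["TN"]
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }


# Illustrative confusion matrix only (NOT the study's results).
cm = [
    [120, 0, 0, 0],
    [0, 114, 5, 1],
    [0, 6, 113, 1],
    [0, 2, 1, 117],
]

per_class = [metrics(one_vs_rest_counts(cm, k)) for k in range(len(cm))]
macro = {
    name: sum(m[name] for m in per_class) / len(per_class)
    for name in per_class[0]
}
```

Because TN counts every image that neither belongs to nor is predicted as the class in question, per-class accuracy and specificity tend to be higher than precision and recall in multiclass settings.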

Methods

- 1. Model evaluation

- Google AutoML Vision performance was measured at confidence threshold levels from 0.0 to 1.0. The precision-recall curve for the model is shown in Fig. 2. The average precision score was 0.983. When the confidence threshold was 0.5, precision and recall were 0.963 and 0.961, respectively. The image classification performance of each implant system tested in this study is presented in Table 2.
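The effect of the confidence threshold can be sketched as follows. This is one common way to compute precision and recall for a single-label classifier at a given threshold, not necessarily the exact procedure used by AutoML Vision, and the five predictions are invented toy values.

```python
from typing import List, Tuple

# (true label, top predicted label, confidence score of that prediction)
Prediction = Tuple[str, str, float]


def precision_recall_at(predictions: List[Prediction],
                        threshold: float) -> Tuple[float, float]:
    """Only predictions scoring at or above the threshold are counted."""
    kept = [(true, pred) for true, pred, score in predictions if score >= threshold]
    correct = sum(1 for true, pred in kept if true == pred)
    # Convention (an assumption): precision is 1.0 when nothing is predicted.
    precision = correct / len(kept) if kept else 1.0
    recall = correct / len(predictions)
    return precision, recall


# Toy predictions for illustration only (NOT the study's data).
toy = [
    ("TSIII", "TSIII", 0.99),
    ("USII", "USII", 0.90),
    ("USII", "Osseotite External", 0.55),
    ("Osseotite External", "Osseotite External", 0.80),
    ("Xive", "Xive", 0.40),
]

at_half = precision_recall_at(toy, 0.5)
at_strict = precision_recall_at(toy, 0.6)
```

Raising the threshold discards low-confidence predictions, which tends to raise precision and lower recall. Sweeping the threshold from 0.0 to 1.0 traces out a precision-recall curve.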

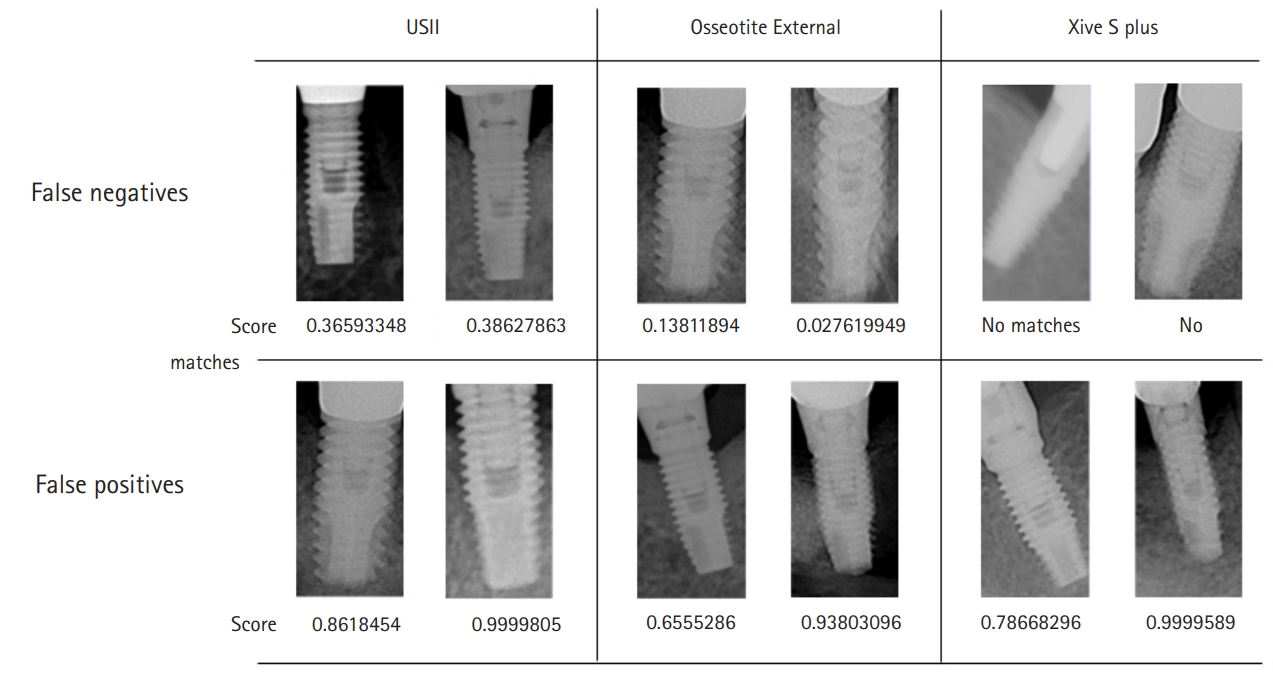

- The confusion matrix shows how often the model classified each label correctly, and the labels that were most often confused with that label (Fig. 3). Examples of the FNs and FPs for each implant system are shown in Fig. 4. Osstem TSIII had an accuracy of 100%, and there were no FNs or FPs.
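As a small illustration of how a confusion matrix is read for the most frequently confused labels, the sketch below sums the two off-diagonal cells for every pair of classes and reports the largest. The matrix values are invented for illustration and are not the study's results, and the function name is hypothetical.

```python
from typing import List, Tuple


def most_confused_pair(labels: List[str],
                       cm: List[List[int]]) -> Tuple[str, str, int]:
    """Return the pair of labels with the most mutual misclassifications.
    Rows of cm are true labels and columns are predicted labels."""
    best = (labels[0], labels[1], cm[0][1] + cm[1][0])
    for i in range(len(labels)):
        for j in range(i + 1, len(labels)):
            mixed_up = cm[i][j] + cm[j][i]    # i read as j, plus j read as i
            if mixed_up > best[2]:
                best = (labels[i], labels[j], mixed_up)
    return best


labels = ["TSIII", "USII", "Osseotite External", "Xive"]
# Illustrative confusion matrix only (NOT the study's results).
toy_cm = [
    [120, 0, 0, 0],
    [0, 114, 5, 1],
    [0, 6, 113, 1],
    [0, 2, 1, 117],
]

pair = most_confused_pair(labels, toy_cm)
```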

- 2. Model cost

- The monetary charges for AutoML Vision consisted of model learning and deployment costs. The charge rate for learning the image classification model was $3.15 per node hour. Each node corresponded to the n1-standard-8 machine. The charge rate for model deployment online was $1.25 per node hour. Table 3 shows Google’s current charge rates and the total cost of the present research model.
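The cost arithmetic implied by these rates can be sketched as below. The rates and the 32 training node hours come from the text; the number of deployment node hours is not given in this section, so the 24 hours used in the example is purely hypothetical, and the resulting figures are derived here rather than quoted from Table 3.

```python
from decimal import Decimal

TRAIN_RATE = Decimal("3.15")     # USD per node hour for training (from the text)
DEPLOY_RATE = Decimal("1.25")    # USD per node hour for online deployment (from the text)
TRAIN_NODE_HOURS = 32            # training budget reported in the Methods


def total_cost(train_node_hours: int, deploy_node_hours: int) -> Decimal:
    """Training cost plus deployment cost, in USD."""
    return TRAIN_RATE * train_node_hours + DEPLOY_RATE * deploy_node_hours


training_cost = total_cost(TRAIN_NODE_HOURS, 0)     # 32 x 3.15 = 100.80
example_total = total_cost(TRAIN_NODE_HOURS, 24)    # 24 h of deployment is hypothetical
```

`Decimal` is used instead of floating-point numbers so that currency amounts are not affected by binary rounding.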

Results

- This study evaluated how accurately dental implant systems could be classified using the AutoML model available on the Google Cloud platform. The results showed that the CNN-based AutoML model classified the implant systems with a high level of accuracy. These results suggest that the AI model may be effective for diagnosis and classification in other fields of dentistry.

- Using AutoML Vision, researchers can build models using Google’s latest machine learning technology and deploy them on mobile applications and websites. Important advantages of AutoML are its simplicity and ease of use. With an AutoML platform, users only need to collect and label images for analysis. Therefore, deep learning can be easily executed without high-specification equipment or expertise in AI programming. In a previous study, high accuracy was reported when automated deep learning software was used for 27 types of implants [28]. However, such deep learning software has the disadvantages of being expensive, being difficult for multiple people to share and use, and requiring high-performance computers.

- Cloud computing is an online environment where information technology-related functions such as data storage, networking, and applications can be accessed from the Internet. Cloud computing offers the advantage of accessing high-performance computing resources and storage facilities to provide flexibility and scalability. Using cloud computing, individuals and businesses can reduce the costs of maintaining computer systems, installing servers, and purchasing software, leading to increased productivity [29]. However, there are ethical issues associated with uploading medical information to the cloud. Medical information stored in the cloud carries the risk of hacking or data leakage. There is also the risk of unauthorized access by cloud providers, network administrators, and other users. In this study, patient information was completely removed while cropping the periapical radiographs, and only the implant images were uploaded to the cloud system. However, additional discussions and consensus regarding cloud systems and medical information are required.

- This study used Google Cloud, a leading global cloud service. A unique advantage of implant identification using a cloud-based system is that collaboration with various implant users is possible. Because of their nature, a wide variety of implants are used worldwide. Therefore, this collaboration will help develop a clinically usable implant identification model. Additionally, it is possible to develop software or applications using the APIs provided by the cloud. Finally, when a clinician encounters an implant that must be identified, the implant can be tested by accessing the cloud system and uploading an image. This is also cost-effective [30].

- The accuracy (0.981) and F1 score (0.962) in this study using AutoML were high, similar to those in the studies by Kim et al. [18] and Lee and Jeong [31] using various pretrained networks (SqueezeNet, GoogLeNet, ResNet-18, MobileNetV2, ResNet-50, and Inception v3). These results confirm that cloud-based AutoML and fine-tuned CNN algorithms are effective in classifying dental implant systems.

- In the present study, Osstem TSIII exhibited a very high level of accuracy owing to its internal connection and cutting edge design. Dentsply Sirona Xive also uses an internal connection but has a platform-matched design, which differs from that of Osstem TSIII and is more similar to an external type [32,33]. Osstem USII and 3i Osseotite External were most often confused in the confusion matrix. This was because they had the same external connection type, and their cutting edge designs appeared similar on the periapical images. Nevertheless, Osstem USII and 3i Osseotite External showed accuracies of 0.969 and 0.965, respectively, indicating that the CNN algorithm-based AutoML model can classify implants with similar designs.

- The analysis of FNs and FPs revealed that if the horizontal projection angle of the image was large for maxillary implants, the internal structure was relatively unclear. Hence, the accuracy was reduced. Additionally, in the FN examples of Dentsply Sirona Xive, the image recognition performance was significantly affected if the density and contrast were inappropriate or if the prosthetic parts of the adjacent teeth overlapped. In addition, in some FN examples of 3i Osseotite and FP examples of Dentsply Sirona Xive, the implant could be clearly identified visually, yet the algorithm assigned a very low or very high score, resulting in an incorrect classification. The quantified analysis provided by AutoML Vision for the failed analyses will be helpful for fine-tuning the algorithm for implant identification.

- This study has several limitations that should be considered. First, good-quality periapical radiographic images were selected by the prosthodontist and included in the datasets. The use of real clinical images that are not standardized could potentially reduce the accuracy of the model. Second, the types of implants used in this study were limited; in particular, internal and external implants were easy to distinguish, which may have negatively affected confidence in the accuracy of the study. Furthermore, the present study used AutoML. Thus, there was a lack of detailed information on exactly which algorithms Google utilized on the cloud platform.

- Further studies are needed to collect images of implant systems produced at multiple institutions and dental hospitals. High diversity and quality of the dataset will improve the accuracy and universality of the model. In addition, the model should classify diameters and lengths as well as implant systems because the compatibility of connections varies depending on the size [34]. The development of deep learning algorithms that can accurately classify the diameter and length of implants may increase their clinical applicability.

- We found that deep learning-based AutoML on a cloud platform is useful for the classification of dental implant systems with high accuracy. This was achieved through collaboration based on an online cloud system. Higher-quality implant images from various institutions and dental hospitals are required to improve the performance and clinical usability of this model.

Discussion

Conflicts of interest

No potential conflict of interest relevant to this article was reported.

Funding

This study was supported by Wonkwang University in 2023.

Notes

Fig. 1. Periapical radiographs and cropped images of the four types of selected implants. TSIII and USII: Osstem Implant Co. Ltd., Seoul, Korea; Osseotite External: Biomet 3i LLC, West Palm Beach, FL, USA; Xive S plus: Dentsply Sirona, York, PA, USA.

Fig. 2. Precision and recall curves on confidence threshold. (A) Precision-recall curve. It shows the trade-off between precision and recall. (B) Precision-recall by confidence threshold. It shows how the model performs on the top-scored label along the full range of confidence threshold values.

Fig. 3. Confusion matrix for multiclass classification using Google AutoML Vision user interface. TSIII and USII: Osstem Implant Co. Ltd., Seoul, Korea; Osseotite External: Biomet 3i LLC, West Palm Beach, FL, USA; Xive S plus: Dentsply Sirona, York, PA, USA; Google AutoML Vision: Google LLC, Mountain View, CA, USA.

Fig. 4. Examples of false negatives and false positives. USII: Osstem Implant Co. Ltd., Seoul, Korea; Osseotite External: Biomet 3i LLC, West Palm Beach, FL, USA; Xive S plus: Dentsply Sirona, York, PA, USA.

Table 1. Dental implant systems and the number of images used for model learning

Table 2. Model performance of implant system classification

Table 3. Total cost for model training and deployment on Google AutoML Vision platform

- 1. Steigenga JT, al-Shammari KF, Nociti FH, Misch CE, Wang HL. Dental implant design and its relationship to long-term implant success. Implant Dent 2003;12:306–17.

- 2. Adell R, Lekholm U, Rockler B, Brånemark PI. A 15-year study of osseointegrated implants in the treatment of the edentulous jaw. Int J Oral Surg 1981;10:387–416.

- 3. van Steenberghe D, Lekholm U, Bolender C, Folmer T, Henry P, Herrmann I, et al. Applicability of osseointegrated oral implants in the rehabilitation of partial edentulism: a prospective multicenter study on 558 fixtures. Int J Oral Maxillofac Implants 1990;5:272–81.

- 4. Buser D, Weber HP, Bragger U, Balsiger C. Tissue integration of one-stage ITI implants: 3-year results of a longitudinal study with Hollow-Cylinder and Hollow-Screw implants. Int J Oral Maxillofac Implants 1991;6:405–12.

- 5. Adell R, Eriksson B, Lekholm U, Brånemark PI, Jemt T. Long-term follow-up study of osseointegrated implants in the treatment of totally edentulous jaws. Int J Oral Maxillofac Implants 1990;5:347–59.

- 6. Al-Johany SS, Al Amri MD, Alsaeed S, Alalola B. Dental implant length and diameter: a proposed classification scheme. J Prosthodont 2017;26:252–60.

- 7. Michelinakis G, Sharrock A, Barclay CW. Identification of dental implants through the use of Implant Recognition Software (IRS). Int Dent J 2006;56:203–8.

- 8. Jokstad A, Braegger U, Brunski JB, Carr AB, Naert I, Wennerberg A. Quality of dental implants. Int Dent J 2003;53(6 Suppl 2):409–43.

- 9. Oshida Y, Tuna EB, Aktören O, Gençay K. Dental implant systems. Int J Mol Sci 2010;11:1580–678.

- 10. Jokstad A, Ganeles J. Systematic review of clinical and patient-reported outcomes following oral rehabilitation on dental implants with a tapered compared to a non-tapered implant design. Clin Oral Implants Res 2018;29(Suppl 16):41–54.

- 11. Lee KY, Shin KS, Jung JH, Cho HW, Kwon KH, Kim YL. Clinical study on screw loosening in dental implant prostheses: a 6-year retrospective study. J Korean Assoc Oral Maxillofac Surg 2020;46:133–42.

- 12. Dudhia R, Monsour PA, Savage NW, Wilson RJ. Accuracy of angular measurements and assessment of distortion in the mandibular third molar region on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol Endod 2011;111:508–16.

- 13. Kayal RA. Distortion of digital panoramic radiographs used for implant site assessment. J Orthod Sci 2016;5:117–20.

- 14. Golden JA. Deep learning algorithms for detection of lymph node metastases from breast cancer: helping artificial intelligence be seen. JAMA 2017;318:2184–6.

- 15. Yasaka K, Akai H, Kunimatsu A, Kiryu S, Abe O. Deep learning with convolutional neural network in radiology. Jpn J Radiol 2018;36:257–72.

- 16. Hwang JJ, Jung YH, Cho BH, Heo MS. An overview of deep learning in the field of dentistry. Imaging Sci Dent 2019;49:1–7.

- 17. Casalegno F, Newton T, Daher R, Abdelaziz M, Lodi-Rizzini A, Schürmann F, et al. Caries detection with near-infrared transillumination using deep learning. J Dent Res 2019;98:1227–33.

- 18. Kim JE, Nam NE, Shim JS, Jung YH, Cho BH, Hwang JJ. Transfer learning via deep neural networks for implant fixture system classification using periapical radiographs. J Clin Med 2020;9:1117.

- 19. Sukegawa S, Yoshii K, Hara T, Yamashita K, Nakano K, Yamamoto N, et al. Deep neural networks for dental implant system classification. Biomolecules 2020;10:984.

- 20. Takahashi T, Nozaki K, Gonda T, Mameno T, Wada M, Ikebe K. Identification of dental implants using deep learning-pilot study. Int J Implant Dent 2020;6:53.

- 21. Park W, Schwendicke F, Krois J, Huh JK, Lee JH. Identification of dental implant systems using a large-scale multicenter data set. J Dent Res 2023;102:727–33.

- 22. Kong HJ, Eom SH, Yoo JY, Lee JH. Identification of 130 dental implant types using ensemble deep learning. Int J Oral Maxillofac Implants 2023;38:150–6.

- 23. Deo RC. Machine learning in medicine. Circulation 2015;132:1920–30.

- 24. Stead WW. Clinical implications and challenges of artificial intelligence and deep learning. JAMA 2018;320:1107–8.

- 25. Kim IK, Lee K, Park JH, Baek J, Lee WK. Classification of pachychoroid disease on ultrawide-field indocyanine green angiography using auto-machine learning platform. Br J Ophthalmol 2021;105:856–61.

- 26. Zeng Y, Zhang J. A machine learning model for detecting invasive ductal carcinoma with Google Cloud AutoML Vision. Comput Biol Med 2020;122:103861.

- 27. Faes L, Wagner SK, Fu DJ, Liu X, Korot E, Ledsam JR, et al. Automated deep learning design for medical image classification by health-care professionals with no coding experience: a feasibility study. Lancet Digit Health 2019;1:e232–42.

- 28. Park W, Huh JK, Lee JH. Automated deep learning for classification of dental implant radiographs using a large multi-center dataset. Sci Rep 2023;13:4862.

- 29. Griebel L, Prokosch HU, Köpcke F, Toddenroth D, Christoph J, Leb I, et al. A scoping review of cloud computing in healthcare. BMC Med Inform Decis Mak 2015;15:17.

- 30. Li Z, Guo H, Wang WM, Guan Y, Barenji AV, Huang GQ, et al. A blockchain and AutoML approach for open and automated customer service. IEEE Trans Ind Inform 2019;15:3642–51.

- 31. Lee JH, Jeong SN. Efficacy of deep convolutional neural network algorithm for the identification and classification of dental implant systems, using panoramic and periapical radiographs: a pilot study. Medicine (Baltimore) 2020;99:e20787.

- 32. Wu S, Wu X, Shrestha R, Lin J, Feng Z, Liu Y, et al. Clinical and radiologic outcomes of submerged and nonsubmerged bone-level implants with internal hexagonal connections in immediate implantation: a 5-year retrospective study. J Prosthodont 2018;27:101–7.

- 33. Uraz A, Isler SC, Cula S, Tunc S, Yalim M, Cetiner D. Platform-switched implants vs platform-matched implants placed in different implant-abutment interface positions: a prospective randomized clinical and microbiological study. Clin Implant Dent Relat Res 2020;22:59–68.

- 34. Karl M, Irastorza-Landa A. In vitro characterization of original and nonoriginal implant abutments. Int J Oral Maxillofac Implants 2018;33:1229–39.

References
